Monday 26 February 2018

WebStock 2018 notes

Andy Budd - The accidental leader (high performing teams)

* processes don't make good products, people do

* build the team people want to be on

* create the best environment to do great work

* schedule time to experiment and innovate

* execute at pace

* remove organisational barriers

* remove the trash from jobs

* Google Aristotle Project

* psychological safety; and other stuff

how this applies to us

* execute at pace -> reducing long lead times/WIP -> org barriers/trash

* are we a high performing team? where can we be better?

Scott Hanselman - Open source pancreas

* a lot of engineers building the same systems - v complicated

* social media to share, building on other parts; building the pancreas

* extracting data from closed source apps

how this applies to us

* how to extract data to send to GPs (results?), how to insert data from GPs (referrals?)

* who owns the data? it's about the patients but they can't access it. What apps can access.

Haley Van Dyck - USDS

* healthcare fail -> US Digital Service

* 86B on IT projects, 94% failure (inc over budget/late), 40% never released

* focused teams helping depts

* only appointee to stay in admin change O->T

1. never let a crisis go to waste

2. turn risk into asset

take other peoples' risk

3. design for failure

have something/incremental steps

4. mind the culture change trap

deliver results to change culture; don't try to change people

how this applies to us

* unfortunately we are probably one of the orgs they would be helping

* use some of their techniques? small, focused teams?

Lee Vinsel - The innovation fetish

(historian)

* innovation speak vs actual innovation

* little evidence of disruptive innovation (except Amazon or Netflix)

* 67% of software effort is in maintenance / not design (or innovation)

* failure to consider maintenance when building & maintenance jobs have lower status

how this applies to us

* more effort to prevent maintenance tasks?

* retire old stuff? - pas integrations?

* get our ALM happening?

using online tests to help with the diagnosis of ADHD

The application consists of a collection of tests written in html/javascript to collect data on things like attention span, impulse resistance, planning etc that can help in a diagnosis for ADHD. The front end is a .net MVC app with results presented via SQL Server Reporting Services (and linked to from the app). The tests were all designed by one of the awesome doctors here. He even coded a lot of them. Maybe I can retire soon. The system went live at the start of 2018.

Goals:

- allow testing for ADHD without having to have a psychologist in the room (spend their time elsewhere)

- faster testing than paper versions

- establish a set of norms for NZ - we currently don't have any so have to compare our kids to others e.g. US kids

- store the results electronically, once we have norms can show side-by-side with a result set to compare

Example test 1: Response inhibition (not clicking on things you shouldn't). When a 'P' shows you should click the RESPONSE button. When an 'R' shows you shouldn't click it. The Ps show a lot so you get into a pattern of clicking, and stopping yourself from clicking on the Rs can be challenging.

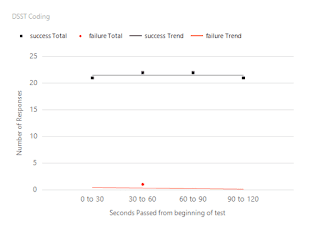

results for this show how often the client avoided clicking the R and also the speed of the responses with a trend line to show if it got better or worse over time

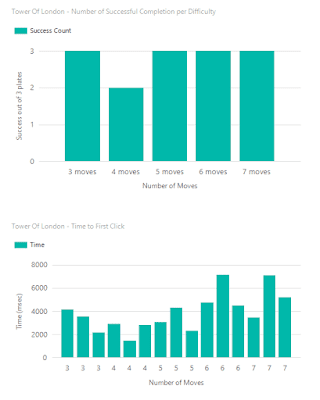
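A minimal sketch of how those two results could be calculated from the raw trials. The trial shape (`stimulus`, `clicked`, `responseMs`) and the function names are assumptions for illustration, not what the real app uses; the trend line here is a plain least-squares slope over the response times in the order they happened.

```javascript
// Hypothetical trial shape: { stimulus: "P" | "R", clicked: boolean, responseMs: number | null }
function summariseInhibition(trials) {
    // 'R' trials are the ones the client should NOT click
    var noGo = trials.filter(function (t) { return t.stimulus === "R"; });
    var avoided = noGo.filter(function (t) { return !t.clicked; }).length;

    // response times for clicks on 'P', in presentation order
    var times = trials
        .filter(function (t) { return t.stimulus === "P" && t.clicked; })
        .map(function (t) { return t.responseMs; });

    var total = times.reduce(function (a, b) { return a + b; }, 0);

    return {
        avoidedRate: noGo.length ? avoided / noGo.length : null,
        meanResponseMs: times.length ? total / times.length : null,
        // positive = getting slower over time, negative = getting faster
        trendMsPerTrial: leastSquaresSlope(times)
    };
}

// Least-squares slope of the values against their index (0..n-1)
function leastSquaresSlope(ys) {
    var n = ys.length;
    if (n < 2) {
        return 0;
    }
    var meanX = (n - 1) / 2;
    var meanY = ys.reduce(function (a, b) { return a + b; }, 0) / n;
    var num = 0;
    var den = 0;
    for (var i = 0; i < n; i++) {
        num += (i - meanX) * (ys[i] - meanY);
        den += (i - meanX) * (i - meanX);
    }
    return num / den;
}
```

A positive `trendMsPerTrial` would mean responses slowed down as the test went on.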

Example test 2: Tower of London

Moving stacks of objects around to match a given pattern with a limited number of moves available. Tests planning before jumping in and clicking everything. There are three tests at each difficulty (i.e. 3 tests where there are 3 moves to complete, 3 tests where there are 4 moves etc).

Results show how long it took for the first click (how much planning you did) to happen and also the successes.
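As a rough sketch, the planning time and successes could be grouped by difficulty like this. The attempt fields (`movesAllowed`, `shownAt`, `firstClickAt`, `solved`) are made-up names for illustration; the real tests may record things differently.

```javascript
// Hypothetical attempt shape:
// { movesAllowed: number, shownAt: msec, firstClickAt: msec, solved: boolean }
function summariseTower(attempts) {
    var byDifficulty = {};

    attempts.forEach(function (a) {
        var group = byDifficulty[a.movesAllowed];
        if (!group) {
            group = { attempts: 0, successes: 0, planningTotalMs: 0 };
            byDifficulty[a.movesAllowed] = group;
        }
        group.attempts++;
        if (a.solved) {
            group.successes++;
        }
        // planning time = how long before the first click happened
        group.planningTotalMs += a.firstClickAt - a.shownAt;
    });

    Object.keys(byDifficulty).forEach(function (key) {
        var group = byDifficulty[key];
        group.meanPlanningMs = group.planningTotalMs / group.attempts;
    });

    return byDifficulty;
}
```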

Example test 3: Recalling a sequence of digits. The sequence gets longer as long as the client is getting the values correct.
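A minimal sketch of that loop, assuming the test stops at the first wrong answer (the notes above don't say what happens on a miss). `askClient` is a hypothetical callback that shows the sequence and returns the digits the client entered; `random` can be swapped out to make the sequences repeatable.

```javascript
// Runs a digit-span session and returns the longest sequence length recalled correctly.
// askClient(sequence) is an assumed callback returning the client's answer as an array of digits.
function runDigitSpan(askClient, startLength, maxLength, random) {
    random = random || Math.random;
    var length = startLength;
    var longestCorrect = 0;

    while (length <= maxLength) {
        var sequence = [];
        for (var i = 0; i < length; i++) {
            sequence.push(Math.floor(random() * 10));
        }

        var answer = askClient(sequence);
        var correct = answer.length === sequence.length &&
            sequence.every(function (digit, idx) { return digit === answer[idx]; });

        if (!correct) {
            break; // assumption: stop on the first wrong answer
        }

        longestCorrect = length;
        length++; // the sequence only gets longer after a correct answer
    }

    return longestCorrect;
}
```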

Example results for coding (a symbol is displayed, the client has to map that to a letter and then click the correct button). Shows how many mappings were done correctly, how many wrong, and associated trend lines (did it get better or worse over time)

play sound through javascript - using flash over html

CITRIX! Why do you play choppy sound sometimes when using IE/html5? Bad Citrix.

SoundManager can play sounds over flash; if there is no flash it will fall back to using html5.

http://www.schillmania.com/projects/soundmanager2/

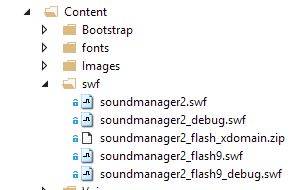

Put all the sound manager support stuff somewhere in your project

Reference soundmanager2.js from your HTML

Setup the Sound Manager; put the path to where you put the support stuff:

    $(document).ready(function () {
        soundManager.setup({
            // where to find flash audio SWFs, as needed
            url: '@Url.Content("~/Content/swf/")',
            preferFlash: true,
            onready: function () {
                // SM2 is ready to play audio!
            }
        });
    });

Use the Sound Manager to play a sound

    var mySound = soundManager.createSound({
        url: baseUrl + "Content/Voice/Hello.mp3"
    });

    // ...and play it
    mySound.play();

Citrix!

javascript benchmarker

How do you know if your javascript is being impacted by something on the client? CPU, memory, disk io. I don't know. Maybe set a timeout for a second and then, when the timeout is triggered, see how close to a second it was? The more variance from a second, the more impact something is having on the browser/js. Then just keep looping that timeout.

benchmarker is probably the wrong term, but life is hard.

Seems mostly the thing that affects JS is CPU. To test:

https://blogs.msdn.microsoft.com/vijaysk/2012/10/26/tools-to-simulate-cpu-memory-disk-load/

http://download.sysinternals.com/files/CPUSTRES.zip

    // Needs jQuery for $.extend
    function Benchmarker() {
        var self = this;
        var times = [];                     // records the time (in msec) at each interval check
        var interval = 1000;                // the amount of time (in msec) to wait between time checking
        var warningVarianceThreshold = 100; // variance (in msec) from the expected time that counts as a warning
        var alertVarianceThreshold = 150;   // variance (in msec) from the expected time that counts as an alert
        var warningCount = 0;
        var alertCount = 0;
        var stopFlag = false;
        var intervalCallback = null;        // a callback function called each time the interval occurs

        // this method will run at a given interval and record the time
        // will compare the current time to the last run time and establish a variance to the expected difference
        function checkInterval() {
            if (stopFlag) {
                return;
            }

            var currentMsec = new Date().getTime();

            if (times.length > 0) {
                var lastMsec = times[times.length - 1];
                var timeChanged = currentMsec - lastMsec;
                var variance = Math.abs(timeChanged - interval);

                if (variance >= alertVarianceThreshold) {
                    alertCount++;
                } else if (variance >= warningVarianceThreshold) {
                    warningCount++;
                }

                if (typeof intervalCallback === "function") {
                    // getData is defined on the instance, so it has to be called via self
                    var data = $.extend(self.getData(), { lastVariance: variance });
                    intervalCallback(data);
                }
            }

            times.push(currentMsec);
            setTimeout(checkInterval, interval);
        }

        this.getData = function () {
            return {
                interval: interval,
                intervalCount: times.length,
                warningVarianceThreshold: warningVarianceThreshold,
                alertVarianceThreshold: alertVarianceThreshold,
                warningCount: warningCount,
                alertCount: alertCount
            };
        };

        this.start = function () {
            checkInterval();
        };

        this.stop = function () {
            stopFlag = true;
        };

        this.getAlertCount = function () {
            return alertCount;
        };

        this.getWarningCount = function () {
            return warningCount;
        };

        this.setIntervalCallback = function (callback) {
            intervalCallback = callback;
        };
    }
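The warning/alert thresholds are easier to sanity check without waiting on real timers. This is a small sketch that pulls the same comparison out into plain functions and runs it over a list of recorded timestamps; `classifyDrift` and `countDrift` are names invented here, not part of the Benchmarker above.

```javascript
// Classify one timer tick by how far it drifted from the expected interval (all values in msec)
function classifyDrift(elapsedMs, intervalMs, warningMs, alertMs) {
    var variance = Math.abs(elapsedMs - intervalMs);
    if (variance >= alertMs) {
        return "alert";
    }
    if (variance >= warningMs) {
        return "warning";
    }
    return "ok";
}

// Count warnings and alerts across a list of recorded timestamps (msec)
function countDrift(timestamps, intervalMs, warningMs, alertMs) {
    var counts = { warningCount: 0, alertCount: 0 };
    for (var i = 1; i < timestamps.length; i++) {
        var result = classifyDrift(timestamps[i] - timestamps[i - 1], intervalMs, warningMs, alertMs);
        if (result === "alert") {
            counts.alertCount++;
        } else if (result === "warning") {
            counts.warningCount++;
        }
    }
    return counts;
}
```

For example, feeding in timestamps captured while CPUSTRES is running should show whether the 100/150 msec thresholds are in a useful range.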

Collect client information via javascript

    function ClientEnvironmentData() {
        var data = [];

        data.push({ Key: "navigator.appName", Value: navigator.appName });
        data.push({ Key: "navigator.userAgent", Value: navigator.userAgent });
        data.push({ Key: "navigator.appVersion", Value: navigator.appVersion });
        data.push({ Key: "navigator.appCodeName", Value: navigator.appCodeName });
        data.push({ Key: "navigator.platform", Value: navigator.platform });
        data.push({ Key: "navigator.oscpu", Value: navigator.oscpu });
        data.push({ Key: "navigator.cookieEnabled", Value: navigator.cookieEnabled });
        data.push({ Key: "navigator.doNotTrack", Value: navigator.doNotTrack });
        data.push({ Key: "navigator.language", Value: navigator.language });
        data.push({ Key: "navigator.onLine", Value: navigator.onLine });
        data.push({ Key: "navigator.product", Value: navigator.product });
        data.push({ Key: "navigator.productSub", Value: navigator.productSub });
        data.push({ Key: "navigator.vendor", Value: navigator.vendor });
        data.push({ Key: "navigator.vendorSub", Value: navigator.vendorSub });
        data.push({ Key: "window.outerWidth", Value: window.outerWidth });
        data.push({ Key: "window.outerHeight", Value: window.outerHeight });
        data.push({ Key: "window.innerWidth", Value: window.innerWidth });
        data.push({ Key: "window.innerHeight", Value: window.innerHeight });

        this.getData = function () {
            return data;
        };

        this.getDataAsXml = function () {
            var xml = "";
            for (var i = 0; i < data.length; i++) {
                xml += "<" + data[i].Key + ">" + data[i].Value + "</" + data[i].Key + ">";
            }
            return xml;
        };
    }
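One thing to watch with getDataAsXml: the values are concatenated as-is, so a value containing `&` or `<` would produce invalid XML. A possible fix, sketched with made-up helper names (`escapeXml`, `toXml`), is to escape each value first:

```javascript
// Escape the five characters that are special in XML text and attributes
function escapeXml(value) {
    return String(value)
        .replace(/&/g, "&amp;")
        .replace(/</g, "&lt;")
        .replace(/>/g, "&gt;")
        .replace(/"/g, "&quot;")
        .replace(/'/g, "&apos;");
}

// Same shape as getDataAsXml, but takes the Key/Value pairs as an argument and escapes the values
function toXml(pairs) {
    var xml = "";
    for (var i = 0; i < pairs.length; i++) {
        xml += "<" + pairs[i].Key + ">" + escapeXml(pairs[i].Value) + "</" + pairs[i].Key + ">";
    }
    return xml;
}
```

The keys are left alone because the ones used above (letters and dots) are already valid element names.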

Tuesday 6 February 2018

Make REST service

Controllers inherit from ApiController

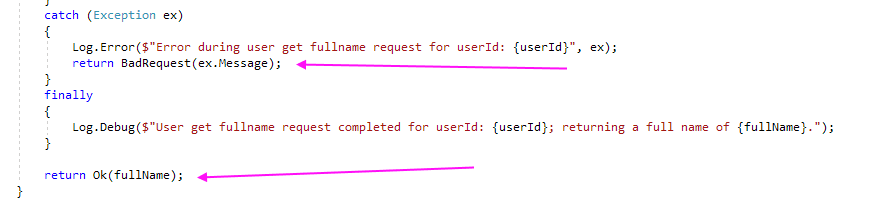

Methods return an IHttpActionResult

Use the helper stuff to return good or bad results

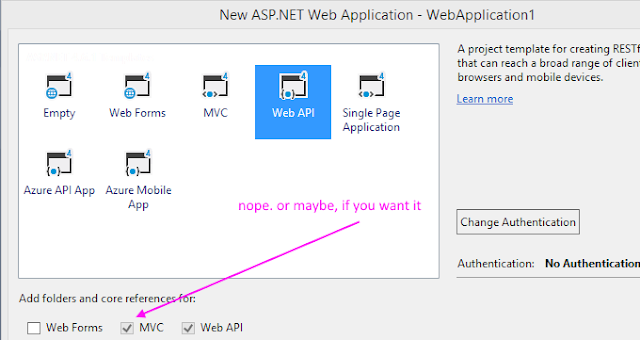

Creating a Web API project will include MVC stuff, but you don't need it. You can create an Empty project and just add in Web API. Only include the MVC stuff if you want views etc., e.g. to make a nice homepage for your REST service with some cat pictures on it.

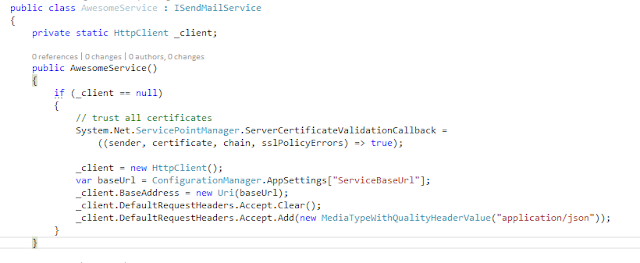

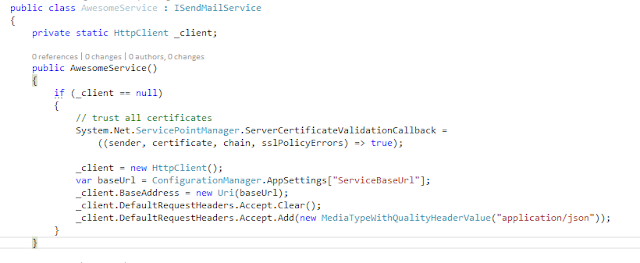

.Net consume REST using HttpClient

Create HttpClient and setup in the constructor

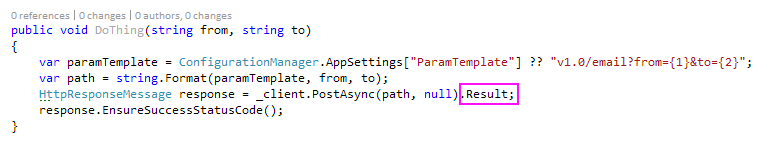

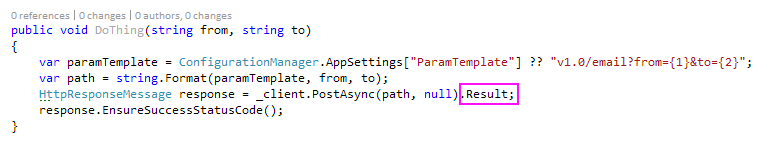

Make requests as required. Use .Result after the post to make life synchronous.

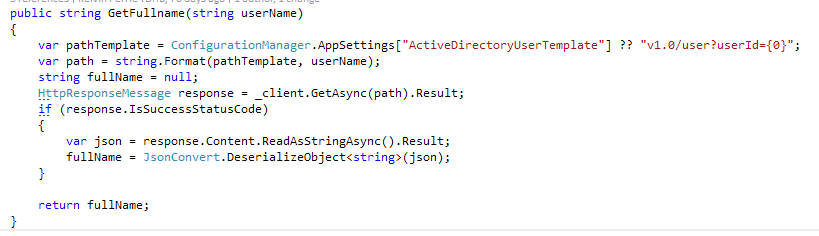

Pull info from the response if needed, use Newtonsoft.Json to deserialize to objects.

Stuff, stuff, stuff

    using System;
    using System.Configuration;
    using System.Net.Http;
    using System.Net.Http.Headers;

    public class AwesomeService : IAwesomeService
    {
        private static HttpClient _client;

        public AwesomeService()
        {
            if (_client == null)
            {
                // trust all certificates
                // (this turns off certificate checking for the whole process, so only OK for internal/test services)
                System.Net.ServicePointManager.ServerCertificateValidationCallback =
                    ((sender, certificate, chain, sslPolicyErrors) => true);

                _client = new HttpClient();
                var baseUrl = ConfigurationManager.AppSettings["ServiceBaseUrl"];
                _client.BaseAddress = new Uri(baseUrl);
                _client.DefaultRequestHeaders.Accept.Clear();
                _client.DefaultRequestHeaders.Accept.Add(new MediaTypeWithQualityHeaderValue("application/json"));
            }
        }

        public void DoThing(string from, string to)
        {
            // string.Format placeholders are zero-based: {0} = from, {1} = to
            var paramTemplate = ConfigurationManager.AppSettings["ParamTemplate"] ?? "v1.0/email?from={0}&to={1}";
            var path = string.Format(paramTemplate, Uri.EscapeDataString(from), Uri.EscapeDataString(to));

            // .Result blocks until the request completes
            HttpResponseMessage response = _client.PostAsync(path, null).Result;
            response.EnsureSuccessStatusCode();
        }
    }

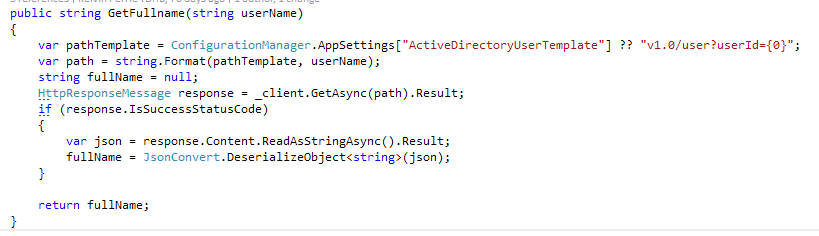

    // Another method on AwesomeService; needs "using Newtonsoft.Json;" for JsonConvert
    public string GetFullname(string userName)
    {
        var pathTemplate = ConfigurationManager.AppSettings["ActiveDirectoryUserTemplate"] ?? "v1.0/user?userId={0}";
        var path = string.Format(pathTemplate, userName);
        string fullName = null;

        HttpResponseMessage response = _client.GetAsync(path).Result;
        if (response.IsSuccessStatusCode)
        {
            var json = response.Content.ReadAsStringAsync().Result;
            fullName = JsonConvert.DeserializeObject<string>(json);
        }

        return fullName;
    }

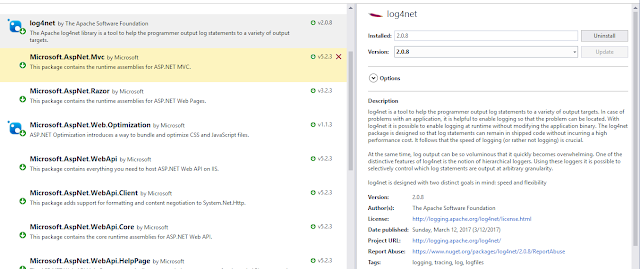

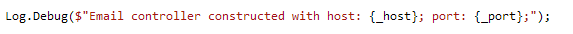

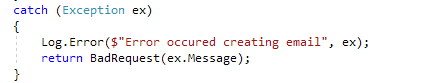

Configuring Log4net

reference:

https://stackoverflow.com/questions/10204171/configure-log4net-in-web-application

Add the nuget package:

Stick this line somewhere:

[assembly: log4net.Config.XmlConfigurator(Watch = true)]

(like the AssemblyInfo.cs file, or just cram it at the top of your controller like a madman - but only include it once)

Configure web.config settings:

    <configSections>
      <section name="log4net" type="log4net.Config.Log4NetConfigurationSectionHandler, log4net" />
    </configSections>

    <log4net debug="true">
      <appender name="RollingLogFileAppender" type="log4net.Appender.RollingFileAppender">
        <file value="RollingLog.txt" />
        <appendToFile value="true" />
        <rollingStyle value="Size" />
        <maxSizeRollBackups value="5" />
        <maximumFileSize value="10MB" />
        <staticLogFileName value="true" />
        <layout type="log4net.Layout.PatternLayout">
          <conversionPattern value="%-5p %d %5rms %-22.22c{1} %-18.18M - %m%n" />
        </layout>
      </appender>
      <root>
        <level value="DEBUG" />
        <appender-ref ref="RollingLogFileAppender" />
      </root>
    </log4net>

Get a reference to the logger

private static readonly ILog Log = log4net.LogManager.GetLogger(System.Reflection.MethodBase.GetCurrentMethod().DeclaringType);

Go crazy logging things

Output looks like

Versioning in REST

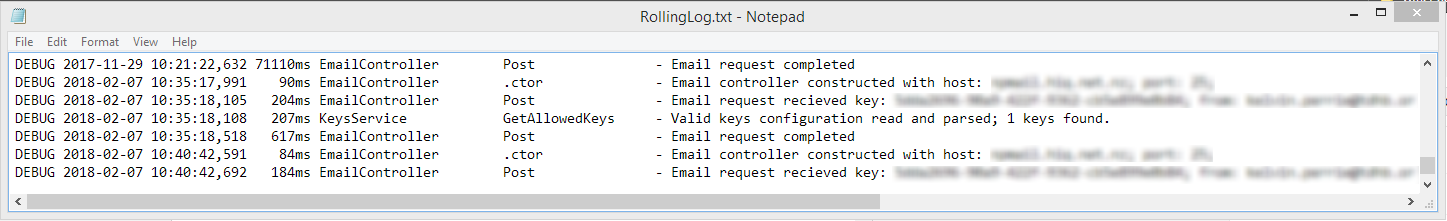

Details at

https://github.com/Microsoft/aspnet-api-versioning/wiki

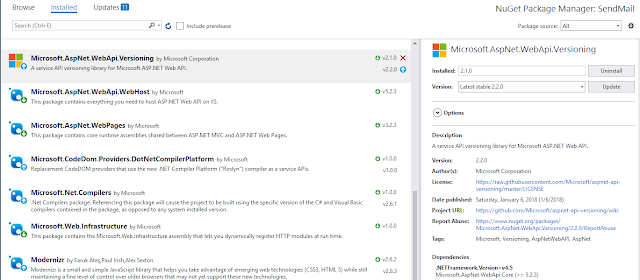

Add the nuget package

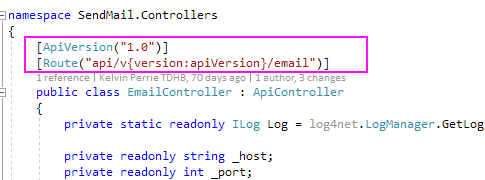

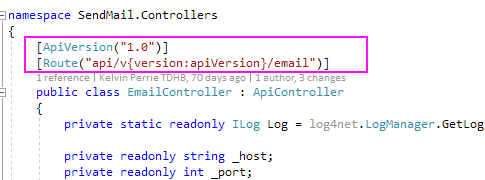

Decorate the controller

Access the API using the new path (described in the values that decorate the controller), e.g. http://whatever/api/v1.0/email

Can test using Swagger. Install Swashbuckle nuget package. Run your project and go to your URL with swagger on the end, e.g.

http://localhost:10539/swagger

which will redirect to http://localhost:10539/swagger/ui/index

This lists your controllers.

Click the one you want to test and available actions are shown. The non-versioned one will not work.

Click the one with the version in the URL. Enter params where required and click 'try it out!'.

Confirm the response is ok.

Edit:

The WebApiConfig should be updated automatically(?) but sometimes isn't?

The error:

The inline constraint resolver of type 'DefaultInlineConstraintResolver' was unable to resolve the following inline constraint: 'apiVersion'

Means you need to resolve the apiVersion value in WebApiConfig; it should look something like

    // Web API routes
    var constraintResolver = new DefaultInlineConstraintResolver()
    {
        ConstraintMap =
        {
            ["apiVersion"] = typeof( ApiVersionRouteConstraint )
        }
    };

    config.MapHttpAttributeRoutes(constraintResolver);
    config.AddApiVersioning();

    config.Routes.MapHttpRoute(
        name: "DefaultApi",
        routeTemplate: "api/{controller}/{id}",
        defaults: new { id = RouteParameter.Optional }
    );

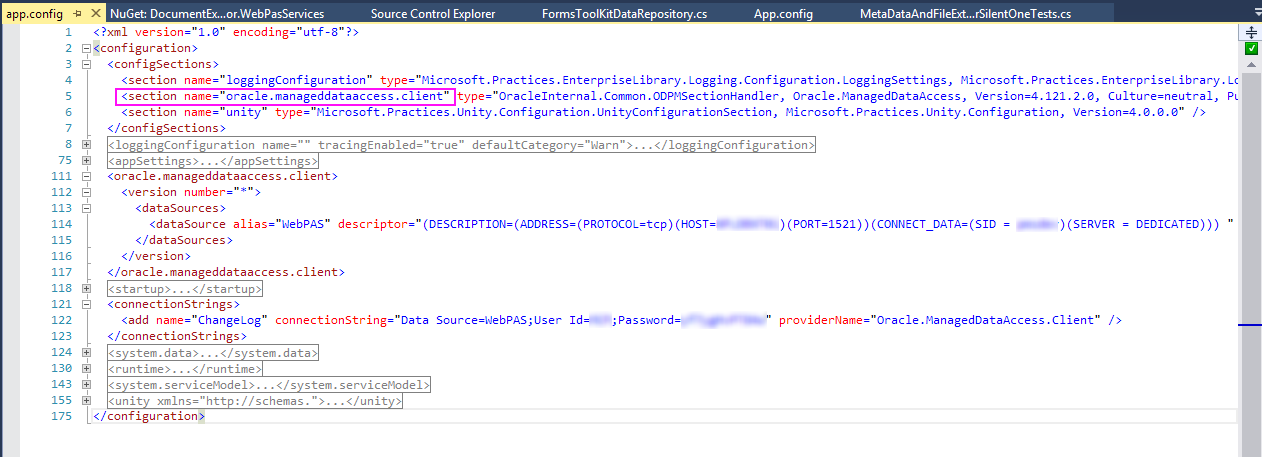

Oracle.ManagedDataAccess - accessing Oracle from .Net without having to install any Oracle client

"ODP.NET, Managed Driver is a 100% native .NET code driver. No additional Oracle Client software is required to be installed to connect to Oracle Database."

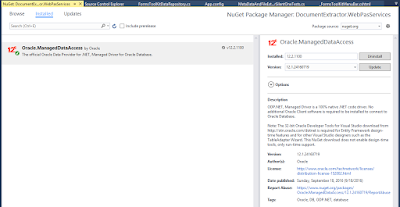

Add this nuget package:

Stuff gets added to config; configure connection

Have had a problem with multiple connection strings where they didn't read from the aliased data source. You can skip the datasource config elements and put the data source details directly into the connection string.

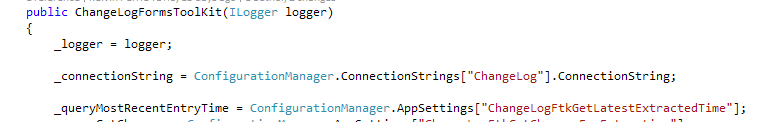

Accessing the connection string as per standard

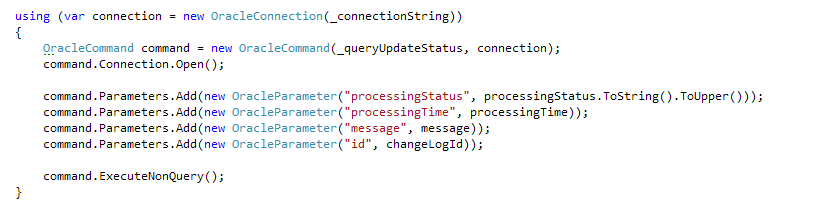

Use the OracleCommand same way as SqlCommand. To issue command (e.g. update):

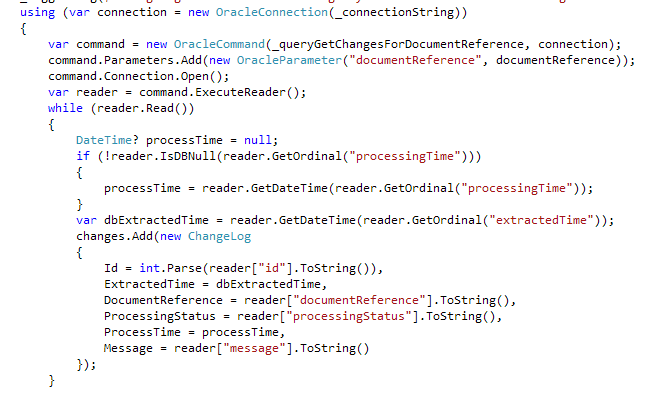

or to read data (e.g. select):

Parameters in the query are prefixed with colon:

NOTE: Ensure that the params added in the code (e.g. using command.Parameters.Add) are added in the same order as they occur in the query. Even though a name is specified when adding a parameter, ODP.NET binds by position by default so the name is ignored, unless you set command.BindByName = true.

TFS 2017 build & release with config transforms

I love config transforms. I do not love TFS environment variables.

These are notes on how to have two builds (debug and release) but multiple target environments (e.g. dev, test and prod) with different web.config settings applied during the release steps (which is done by web.config transforms).

The high level process is:

- Setup a debug and release build. The debug build of the code goes to dev server, the release build goes to test and prod servers.

- During the release to each environment the config transforms are applied to ensure the config is relevant to the environment it is going to (e.g. connects to right database/webservices, has right app settings)

- The code going to both test and prod is identical (with an altered config).

- You don't have to do a build for each environment. You can just do a debug and release build which is quicker if you have a lot of environments

- You don't have to change the project file to add in a transform step. That step would also apply to local builds, which is a pain (you can create a 'localdebug' config and use that locally, but who can be bothered). Although I guess you could do an XDT Transform step during your build without changing the proj file (haven't tried this, but should work).

Do the build

Setup the BuildConfiguration to do a debug and release build by updating BuildConfiguration variable and turning on multi-configuration.

Configure artifact names so the different builds can be distinguished:

This means two builds happen:

Wow.

Do the release

The artifact can be copied to the final website location (e.g. the IIS directory) and the config transform applied there, but there will be a short time where the code will be deployed and the transform not applied - for that time users accessing will get the site with non-transformed (e.g. development) config. So process is:

- stick the artifact files in a temp/working directory

- do the transform for the environment

- move the files to the final website directory

Create release definition(s)

Copy the files from the artifact to a working directory. Create the directory manually first (or add a step to do it) or this copy step will fail.

Apply the config transform to the files in the working directory (this comes from the marketplace https://marketplace.visualstudio.com/items?itemName=qetza.xdttransform#overview)

How does this work when it doesn't have any credentials associated with the step? Dunno. Maybe because the previous copy files step does pass credentials, so the process is already considered authorised on the remote machine.

Copy the files from the working directory to the website directory

Optional: delete the config transforms and clean the working directory:

You have to get the remote delete step from the marketplace. There is a bug with it where if your path has a space you need to wrap it in single quotes.
